Stereo imaging relies on introducing parallax between two photographs of the same subject. Parallax is the apparent displacement of a subject produced by a lateral change in observation position; in stereo photography it simulates the slightly different view seen by each eye. The brain combines these two separate views, resulting in stereopsis, the viewer’s ability to perceive depth. In stereo imaging, parallax between images can be achieved either simultaneously or sequentially. Simultaneous stereo uses two cameras, or a single camera with a double lens array, to capture both views at the same time.

A stereo slit-lamp with dual cameras and a beamsplitter is an example of a simultaneous setup.

Sequential stereo uses a single camera and relies on a lateral shift of camera position between two successive frames. This is the technique most commonly used for stereo fundus photography. A common question in stereo imaging is how far to shift the camera between sequential views. There are a number of conventions used to determine an appropriate shift distance.

The simplest method for general pictorial photography is to separate the images by about 65 mm, which is close to the average distance between the eyes. The left/right images above were separated laterally by about 2.5 inches (65 mm).

Anaglyph stereo image of above pair. Use red/cyan anaglyph glasses with red lens over left eye.
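Each red/cyan anaglyph in this post merges a left and a right view into a single image. A minimal sketch of the simplest channel-swap approach follows, assuming the two frames are already aligned, the same size, and stored as rows of (R, G, B) tuples; `make_anaglyph` is a hypothetical helper, not a library call. Because the red lens sits over the left eye, the red channel comes from the left image and the green and blue (cyan) channels come from the right image.

```python
# Sketch: simple "color" red/cyan anaglyph by channel swapping.
# Assumption: images are aligned, equally sized lists of rows of (R, G, B) tuples.

Pixel = tuple[int, int, int]


def make_anaglyph(left: list[list[Pixel]], right: list[list[Pixel]]) -> list[list[Pixel]]:
    """Take red from the left view and green/blue (cyan) from the right view."""
    if len(left) != len(right) or any(len(a) != len(b) for a, b in zip(left, right)):
        raise ValueError("left and right images must have the same dimensions")
    return [
        [(l_px[0], r_px[1], r_px[2]) for l_px, r_px in zip(left_row, right_row)]
        for left_row, right_row in zip(left, right)
    ]


left = [[(200, 10, 10), (50, 60, 70)]]
right = [[(5, 120, 130), (90, 80, 40)]]
print(make_anaglyph(left, right))  # [[(200, 120, 130), (50, 80, 40)]]
```

Other anaglyph recipes convert to grayscale first or mix channels to reduce retinal rivalry; the straight channel swap is just the easiest to reason about.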

Another common convention is to measure the distance between the camera and the nearest point in the scene and divide that distance by thirty. Neither of these methods accounts for the focal length of the lens, or for subjects so close to or so far from the camera that the resulting shift is inadequate or impractical.
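Both rules of thumb reduce to one line of arithmetic. The sketch below assumes all distances are in millimeters; the names `INTEROCULAR_MM` and `base_one_thirtieth` are illustrative only. Like the rules themselves, it ignores lens focal length.

```python
# Sketch of the two stereo-base conventions (distances in millimeters).

INTEROCULAR_MM = 65.0  # rule 1: roughly the average distance between the eyes


def base_one_thirtieth(nearest_distance_mm: float) -> float:
    """Rule 2: lateral shift = distance to the nearest point in the scene / 30."""
    if nearest_distance_mm <= 0:
        raise ValueError("distance must be positive")
    return nearest_distance_mm / 30.0


print(f"{INTEROCULAR_MM:.1f} mm")                # 65.0 mm
print(f"{base_one_thirtieth(2000):.1f} mm")      # 66.7 mm (nearest point 2 m away)
print(f"{base_one_thirtieth(100):.1f} mm")       # 3.3 mm (100 mm working distance)
```

Since 65 × 30 = 1950, the two conventions agree when the nearest point is roughly 1.95 m from the camera; at a 100 mm working distance the 1/30 rule shrinks the shift to about 3.3 mm.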

Additional formulas exist to compensate for unusual situations, but even these can fall short in some imaging scenarios. Ophthalmology and space exploration are two fields where stereo imaging is routinely used, yet the subjects fall at such extremes that they don’t fit the common stereo “rules”.

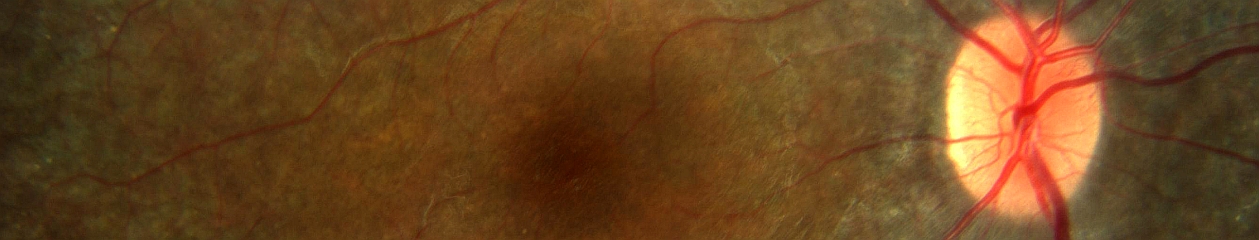

Fundus photography is a unique exception to the rules in that it incorporates the optical properties of the subject eye into the stereo/parallax equation. One could argue that a working distance of about 100 mm from the front element of the fundus camera to the subject eye would require a shift of 3 to 4 mm to create a stereo effect close to the 1/30 standard. This ignores the fact that although the subject is indeed very close to the camera, the focus is actually set at a distance to compensate for the optics of the subject eye. The substantial stereo effect in this scenario comes not from the 1/30 rule but from cornea-induced parallax, as described by Lee Allen in 1964. During the lateral shift between frames, the image-forming rays fall on opposite slopes of the cornea, increasing parallax and creating a hyperstereoscopic effect.

Space exploration also presents some interesting challenges and opportunities in stereo imaging. The National Aeronautics and Space Administration (NASA), the European Space Agency (ESA) and other space agencies routinely produce stereo images.

NASA’s Mars Exploration Rover mission uses simultaneous stereo cameras mounted on the rover vehicles to aid navigation and to create stereo images for analysis of the Martian landscape. Image Credit: NASA/JPL-Caltech.

ESA has used a handheld simultaneous stereo camera on board the International Space Station. These stereo imaging devices can adhere to the basic rules of parallax because their subjects are relatively close. But what about the stereo images of distant space objects presented by the space agencies?

The Lunar and Planetary Institute’s 3-D Tour of the Solar System presents stereo images made with a variety of techniques. Some come from flyby opportunities in which spacecraft capture sequential images; in these instances, the images may be spaced hours, days or weeks apart. In 1980, a Voyager spacecraft captured sequential stereo images of Dione and Rhea, two of Saturn’s moons, during a long-distance flyby. The images of Rhea were taken from positions more than 100,000 kilometers apart! For this method to work, the planet or moon must not change significantly between exposures. Cloud movement or changes in shadow length may compromise a stereo pair if the time between exposures is too long.

Long-distance simultaneous stereo images are captured by NASA’s STEREO (Solar TErrestrial RElations Observatory) program, which employs two nearly identical space-based observatories, one ahead of Earth in its orbit and the other trailing behind, to provide stereoscopic measurements of the Sun.

The two STEREO observatories captured these images of the Sun from different positions at the same moment, a simultaneous capture. Image Credit: NASA/JPL-Caltech/NRL/GSFC

Anaglyph stereo image of above pair. Use red/cyan anaglyph glasses with red lens over left eye. Image Credit: NASA/JPL-Caltech/NRL/GSFC.

In addition to these basic stereo capture concepts, there is another, lesser-known sequential imaging technique that works well for certain subjects. In this case, the camera remains stationary while the subject moves or rotates to produce parallax between sequential images. The Solar and Heliospheric Observatory (SOHO), a collaborative venture between ESA and NASA, images the Sun with its Extreme ultraviolet Imaging Telescope (EIT). SOHO is locked in an orbit directly between the Earth and the Sun, essentially a fixed position in relation to the Sun. The Sun completes a full rotation about once every 27 days, and SOHO’s telescopes capture images of it at the same wavelengths every 6 hours as it slowly rotates. This results in just over three degrees of rotation between sequential frames, enough parallax to create a rotational stereo effect. These images appear very similar to the simultaneous stereo pairs captured by STEREO’s two cameras.
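The “just over three degrees” figure follows directly from the 27-day period and the 6-hour cadence. The sketch below checks it and, for a rough comparison, also computes the angle that a 1/30 stereo base subtends at the nearest point (about 1.9°). It treats rotating the subject as equivalent to orbiting the camera around it by the same angle, an idealization that assumes rotation about an axis perpendicular to the line of sight and ignores the Sun’s faster rotation near its equator. Function names are hypothetical.

```python
import math

ROTATION_PERIOD_DAYS = 27.0  # solar rotation period quoted in the text
FRAME_INTERVAL_HOURS = 6.0   # SOHO EIT cadence quoted in the text


def rotation_per_frame_deg(period_days: float, interval_hours: float) -> float:
    """Degrees the subject turns between two frames taken at a fixed cadence."""
    return 360.0 * interval_hours / (period_days * 24.0)


def one_thirtieth_angle_deg() -> float:
    """Angle at the subject between two viewpoints separated by 1/30 of the distance.

    With base b = d / 30 centered on the line of sight: 2 * atan((b / 2) / d) = 2 * atan(1 / 60).
    """
    return math.degrees(2.0 * math.atan(1.0 / 60.0))


print(f"{rotation_per_frame_deg(ROTATION_PERIOD_DAYS, FRAME_INTERVAL_HOURS):.2f} deg per frame")  # 3.33
print(f"{one_thirtieth_angle_deg():.2f} deg for the 1/30 rule")  # 1.91
```

Under these assumptions, each SOHO frame step gives a somewhat wider viewing angle than the 1/30 convention would, which is consistent with the strong stereo effect in the solar pairs.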

These anaglyph images of a globe demonstrate the rotational stereo effect. The left anaglyph was taken with a lateral shift of 2.5 inches between sequential frames. The right anaglyph was created by slightly rotating the globe between sequential frames with the camera held stationary. Both techniques produce a stereo effect when viewed through red/cyan anaglyph glasses (with the red lens over the left eye). Rotational stereo is not a new concept: in 1948, McKay described rotary parallax as especially useful for close-up photography and stereomicrography.

Which brings us back to ophthalmic imaging. Can rotational stereo be applied to ophthalmic subjects? It is actually quite useful for stereo imaging of the eye on slit-lamp setups that have only one camera mounted. And why not? The eyeball is often referred to as the “globe”, not unlike the Sun.

Here are two sequential frames taken with the patient’s head and the slit-lamp held stationary. After the first image was captured, the patient’s fixation was directed slightly to the left, causing the eye to rotate a few degrees before the second image was captured; together the two frames form the anaglyph stereo pair seen below.

As you can see, this technique induces enough parallax to create stereo pairs of close-up subjects in ophthalmology. Take a look with anaglyph glasses to appreciate the stereo effect.

The next time you see an interesting subject at the photo slit-lamp, think about stereo imaging of celestial bodies and try rotating the “globe” between frames.

This YouTube video demonstrates the rotational stereo effect. I downloaded 27 days’ worth of images from SOHO, created stereo pairs and animated the series to show one full rotation of the Sun in anaglyph stereo. It was constructed from 108 separate images, each taken 6 hours apart with the Extreme ultraviolet Imaging Telescope (EIT).
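The exact pairing procedure for the SOHO series isn’t spelled out. One plausible approach, assumed here, pairs each frame with the next one in time, so every pair spans a single 6-hour step. The frame names are placeholders, and `left_is_later` is a hypothetical switch: which frame belongs to which eye depends on the direction of rotation, so it is worth checking the result with anaglyph glasses and swapping the order if the depth looks inverted.

```python
# Sketch: build stereo pairs from a time-ordered sequence of frames.
# Frame names are placeholders, not real SOHO file names.


def frames_per_rotation(period_days: float, interval_hours: float) -> int:
    """Number of frames captured during one full rotation at a fixed cadence."""
    return round(period_days * 24.0 / interval_hours)


def sequential_pairs(frames: list[str], left_is_later: bool = False) -> list[tuple[str, str]]:
    """Pair each frame with the next one, returning (left, right) tuples."""
    pairs = []
    for earlier, later in zip(frames, frames[1:]):
        pairs.append((later, earlier) if left_is_later else (earlier, later))
    return pairs


n = frames_per_rotation(27, 6)
frames = [f"frame_{i:03d}" for i in range(n)]
pairs = sequential_pairs(frames)
print(n, len(pairs))  # 108 107
print(pairs[0])       # ('frame_000', 'frame_001')
```

The frame count matches the 108 images mentioned above; each resulting pair could then be merged into one anaglyph frame of the animation.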